PySpark: Dataframe Joins

This tutorial explains the various types of joins supported in PySpark. It also covers some challenges in joining two tables that have the same column names. The following topics are covered on this page:

- Types of Joins

- Inner Join

- Left / leftouter / left_outer Join

- Right / rightouter / right_outer Join

- Outer / full / fullouter / full_outer Join

- Cross Join

- Semi / leftsemi / left_semi Join

- Anti / leftanti / left_anti Join

- Special Conditions and Challenges in Joins

➠ Join Syntax: The join function takes up to three parameters; the first is mandatory and the other two are optional.

leftDataframe.join(otherDataframe, on=None, how=None)

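The on parameter can be:

- A string with a column name, if both DataFrames have a joining column with the same name.

- A list of column names, if the join uses several columns that have the same names in both DataFrames.

- A single condition equating columns from the two DataFrames.

- A list of conditions, when the join needs multiple conditions.

- If on is not passed, the join performs a cross join (this works by default in Spark 3.x; Spark 2.x may require spark.sql.crossJoin.enabled=true).

The how parameter is a string naming the join type, for example "inner" (the default), "left", "right", "full", "cross", "semi" or "anti".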
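The join types accepted by the how parameter come in alias groups, as the sections of this tutorial show. The plain-Python sketch below summarizes those groups as a lookup table. CANONICAL and normalize_how are hypothetical names for illustration, not PySpark APIs, and ignoring case and underscores is an assumption that, as far as we know, mirrors how Spark parses join-type strings.

```python
# Hypothetical lookup table (not a PySpark API): one canonical name per
# join type, keyed by the alias spellings used in this tutorial with
# underscores removed.
CANONICAL = {
    "inner": "inner",
    "cross": "cross",
    "left": "left_outer",
    "leftouter": "left_outer",
    "right": "right_outer",
    "rightouter": "right_outer",
    "outer": "full_outer",
    "full": "full_outer",
    "fullouter": "full_outer",
    "semi": "left_semi",
    "leftsemi": "left_semi",
    "anti": "left_anti",
    "leftanti": "left_anti",
}


def normalize_how(how=None):
    """Return the canonical join type for a `how` string; None means inner."""
    if how is None:
        return "inner"
    # Ignore case and underscores, so "left_outer", "LeftOuter" and
    # "leftouter" all land on the same entry.
    key = how.lower().replace("_", "")
    if key not in CANONICAL:
        raise ValueError(f"unsupported join type: {how!r}")
    return CANONICAL[key]


for how in [None, "left", "left_outer", "full", "semi", "left_anti"]:
    print(how, "->", normalize_how(how))
```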

Sample Data: Two different datasets are used to explain joins. The data files can be downloaded from here (employee), here (department), here (dataset 1) and here (dataset 2).

Data 1:

empdf=spark.read.parquet("file:///path_to_file/employee.parquet")

deptdf=spark.read.parquet("file:///path_to_file/department.parquet")

Data 2:

df_1 = spark.read.option("header",True).csv("file:///path_to_file/join_example_file_1.csv")

df_2 = spark.read.option("header",True).csv("file:///path_to_file/join_example_file_2.csv")

df_1.show()
+-----+---------+-------+
|db_id| db_name|db_type|
+-----+---------+-------+
| 12| Teradata| RDBMS|
| 14|Snowflake|CloudDB|
| 15| Vertica| RDBMS|
| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL|
+-----+---------+-------+

df_2.show()
+-----+-----------+-------+
|db_id| db_name|db_type|
+-----+-----------+-------+
| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL|
| 21|SingleStore| RDBMS|
| 22| Mysql| RDBMS|
| 14| Snowflake| RDBMS|
+-----+-----------+-------+

➠ Inner Join: An inner join returns the records that match on the common column(s) of both DataFrames. Inner join is the default join type when the third parameter (how) is not passed to the join function. The image below shows a pictorial representation of an inner join; only the gray portion is returned, i.e. the records common to both DataFrames.

- Example 1:

df_1.join(df_2,"db_id").show()
+-----+---------+-------+---------+-------+
|db_id| db_name|db_type| db_name|db_type|
+-----+---------+-------+---------+-------+
| 14|Snowflake|CloudDB|Snowflake| RDBMS|
| 17| Oracle| RDBMS| Oracle| RDBMS|
| 19| MongoDB| NOSQL| MongoDB| NOSQL|
+-----+---------+-------+---------+-------+

- Example 2:

df_1.join(df_2,df_1.db_id==df_2.db_id).show()
+-----+---------+-------+-----+---------+-------+
|db_id| db_name|db_type|db_id| db_name|db_type|
+-----+---------+-------+-----+---------+-------+
| 14|Snowflake|CloudDB| 14|Snowflake| RDBMS|
| 17| Oracle| RDBMS| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL| 19| MongoDB| NOSQL|
+-----+---------+-------+-----+---------+-------+

- Example 3:

df_1.join(df_2,df_1.db_id==df_2.db_id,"inner").show()
+-----+---------+-------+-----+---------+-------+
|db_id| db_name|db_type|db_id| db_name|db_type|
+-----+---------+-------+-----+---------+-------+
| 14|Snowflake|CloudDB| 14|Snowflake| RDBMS|
| 17| Oracle| RDBMS| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL| 19| MongoDB| NOSQL|
+-----+---------+-------+-----+---------+-------+

- Example 4:

empdf.join(deptdf,empdf.dept_no==deptdf.dept_no,"inner").show()
+-------+--------+-------+-------+-------+---------------+---------+
| emp_no|emp_name| salary|dept_no|dept_no|department_name| loc_name|
+-------+--------+-------+-------+-------+---------------+---------+
|1000245| PRADEEP|5000.00| 100| 100| ACCOUNTS| JAIPUR|
|1000258| BLAKE|2850.00| 300| 300| SALES|BENGALURU|
|1000262| CLARK|2450.00| 100| 100| ACCOUNTS| JAIPUR|
|1000276| JONES|2975.00| 200| 200| R & D|NEW DELHI|
|1000288| SCOTT|3000.00| 200| 200| R & D|NEW DELHI|
|1000292| FORD|3000.00| 200| 200| R & D|NEW DELHI|
|1000294| SMITH| 800.00| 200| 200| R & D|NEW DELHI|
|1000299| ALLEN|1600.00| 300| 300| SALES|BENGALURU|
|1000310| WARD|1250.00| 300| 300| SALES|BENGALURU|
|1000312| MARTIN|1250.00| 300| 300| SALES|BENGALURU|
|1000315| TURNER|1500.00| 300| 300| SALES|BENGALURU|
|1000326| ADAMS|1100.00| 200| 200| R & D|NEW DELHI|
|1000336| JAMES| 950.00| 300| 300| SALES|BENGALURU|
|1000346| MILLER|1300.00| 100| 100| ACCOUNTS| JAIPUR|
+-------+--------+-------+-------+-------+---------------+---------+
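To make the inner-join semantics concrete, here is a plain-Python model of the join on db_id using the sample rows of df_1 and df_2 (ids written as integers for readability). It indexes the right side in a dictionary, which is the basic idea of a hash join; inner_join is a hypothetical helper for illustration, not how Spark actually executes the join.

```python
# Sample rows from df_1 and df_2: (db_id, db_name, db_type).
df_1 = [(12, "Teradata", "RDBMS"), (14, "Snowflake", "CloudDB"),
        (15, "Vertica", "RDBMS"), (17, "Oracle", "RDBMS"),
        (19, "MongoDB", "NOSQL")]
df_2 = [(17, "Oracle", "RDBMS"), (19, "MongoDB", "NOSQL"),
        (21, "SingleStore", "RDBMS"), (22, "Mysql", "RDBMS"),
        (14, "Snowflake", "RDBMS")]


def inner_join(left, right, key=0):
    """Return left+right column tuples for every pair with equal keys."""
    # Index the right side by key, so each left row needs one lookup.
    index = {}
    for rrow in right:
        index.setdefault(rrow[key], []).append(rrow)
    # Left rows without a match contribute nothing to the result.
    return [lrow + rrow for lrow in left for rrow in index.get(lrow[key], [])]


for row in inner_join(df_1, df_2):
    print(row)
```

Only db_id 14, 17 and 19 survive, matching the Spark output above; the row order here simply follows df_1.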

➠ Left / leftouter / left_outer Join: A left outer join returns all records from the left DataFrame, matched or unmatched, together with the matching records from the right DataFrame; where there is no match, the right-side columns are null. Left, leftouter and left_outer are aliases of each other. The image below shows a pictorial representation of a left outer join; only the gray portion is returned, i.e. all data from the left DataFrame and only matched data from the right DataFrame.

- Example 1: In the examples below, an arrow (<--) at the end of a row marks a row from the left DataFrame that has no match in the right DataFrame.

df_1.join(df_2,df_1.db_id==df_2.db_id,"left").show()
+-----+---------+-------+-----+---------+-------+
|db_id| db_name|db_type|db_id| db_name|db_type|
+-----+---------+-------+-----+---------+-------+
| 12| Teradata| RDBMS| null| null| null|<--
| 14|Snowflake|CloudDB| 14|Snowflake| RDBMS|
| 15| Vertica| RDBMS| null| null| null|<--
| 17| Oracle| RDBMS| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL| 19| MongoDB| NOSQL|
+-----+---------+-------+-----+---------+-------+

- Example 2:

empdf.join(deptdf,empdf.dept_no==deptdf.dept_no,"left").show()
+-------+--------+-------+-------+-------+---------------+---------+
| emp_no|emp_name| salary|dept_no|dept_no|department_name| loc_name|
+-------+--------+-------+-------+-------+---------------+---------+
|1000245| PRADEEP|5000.00| 100| 100| ACCOUNTS| JAIPUR|
|1000258| BLAKE|2850.00| 300| 300| SALES|BENGALURU|
|1000262| CLARK|2450.00| 100| 100| ACCOUNTS| JAIPUR|
|1000276| JONES|2975.00| 200| 200| R & D|NEW DELHI|
|1000288| SCOTT|3000.00| 200| 200| R & D|NEW DELHI|
|1000292| FORD|3000.00| 200| 200| R & D|NEW DELHI|
|1000294| SMITH| 800.00| 200| 200| R & D|NEW DELHI|
|1000299| ALLEN|1600.00| 300| 300| SALES|BENGALURU|
|1000310| WARD|1250.00| 300| 300| SALES|BENGALURU|
|1000312| MARTIN|1250.00| 300| 300| SALES|BENGALURU|
|1000315| TURNER|1500.00| 300| 300| SALES|BENGALURU|
|1000326| ADAMS|1100.00| 200| 200| R & D|NEW DELHI|
|1000336| JAMES| 950.00| 300| 300| SALES|BENGALURU|
|1000346| MILLER|1300.00| 100| 100| ACCOUNTS| JAIPUR|
|1000347| DAVID|1400.00| 500| null| null| null|<--
+-------+--------+-------+-------+-------+---------------+---------+
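The left outer join can be modeled in plain Python the same way: every left row is kept, and a left row without a match is padded with one None per right-side column, which Spark displays as null. This is a sketch over the df_1/df_2 sample rows; left_join is a hypothetical helper and assumes the right side is non-empty.

```python
df_1 = [(12, "Teradata", "RDBMS"), (14, "Snowflake", "CloudDB"),
        (15, "Vertica", "RDBMS"), (17, "Oracle", "RDBMS"),
        (19, "MongoDB", "NOSQL")]
df_2 = [(17, "Oracle", "RDBMS"), (19, "MongoDB", "NOSQL"),
        (21, "SingleStore", "RDBMS"), (22, "Mysql", "RDBMS"),
        (14, "Snowflake", "RDBMS")]


def left_join(left, right, key=0):
    """Keep every left row; pad with None where the right side has no match."""
    index = {}
    for rrow in right:
        index.setdefault(rrow[key], []).append(rrow)
    pad = (None,) * len(right[0])  # one None per right-side column (right must be non-empty)
    result = []
    for lrow in left:
        matches = index.get(lrow[key])
        if matches:
            result.extend(lrow + rrow for rrow in matches)
        else:
            result.append(lrow + pad)  # Spark shows these values as null
    return result


for row in left_join(df_1, df_2):
    print(row)
```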

➠ Right / rightouter / right_outer Join: A right outer join returns all records from the right DataFrame, matched or unmatched, together with the matching records from the left DataFrame; where there is no match, the left-side columns are null. Right, rightouter and right_outer are aliases of each other. The image below shows a pictorial representation of a right outer join; only the gray portion is returned, i.e. all data from the right DataFrame and only matched data from the left DataFrame.

- Example 1: In the examples below, an arrow (<--) at the end of a row marks a row from the right DataFrame that has no match in the left DataFrame.

df_1.join(df_2,df_1.db_id==df_2.db_id,"right").show()
+-----+---------+-------+-----+-----------+-------+
|db_id| db_name|db_type|db_id| db_name|db_type|
+-----+---------+-------+-----+-----------+-------+
| 17| Oracle| RDBMS| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL| 19| MongoDB| NOSQL|
| null| null| null| 21|SingleStore| RDBMS|<--
| null| null| null| 22| Mysql| RDBMS|<--
| 14|Snowflake|CloudDB| 14| Snowflake| RDBMS|
+-----+---------+-------+-----+-----------+-------+

- Example 2:

empdf.join(deptdf,empdf.dept_no==deptdf.dept_no,"right").show()
+-------+--------+-------+-------+-------+--------------------+-----------+
| emp_no|emp_name| salary|dept_no|dept_no| department_name| loc_name|
+-------+--------+-------+-------+-------+--------------------+-----------+
|1000346| MILLER|1300.00| 100| 100| ACCOUNTS| JAIPUR|
|1000262| CLARK|2450.00| 100| 100| ACCOUNTS| JAIPUR|
|1000245| PRADEEP|5000.00| 100| 100| ACCOUNTS| JAIPUR|
|1000326| ADAMS|1100.00| 200| 200| R & D| NEW DELHI|
|1000294| SMITH| 800.00| 200| 200| R & D| NEW DELHI|
|1000292| FORD|3000.00| 200| 200| R & D| NEW DELHI|
|1000288| SCOTT|3000.00| 200| 200| R & D| NEW DELHI|
|1000276| JONES|2975.00| 200| 200| R & D| NEW DELHI|
|1000336| JAMES| 950.00| 300| 300| SALES| BENGALURU|
|1000315| TURNER|1500.00| 300| 300| SALES| BENGALURU|
|1000312| MARTIN|1250.00| 300| 300| SALES| BENGALURU|
|1000310| WARD|1250.00| 300| 300| SALES| BENGALURU|
|1000299| ALLEN|1600.00| 300| 300| SALES| BENGALURU|
|1000258| BLAKE|2850.00| 300| 300| SALES| BENGALURU|
| null| null| null| null| 400|INFORMATION TECHN...|BHUBANESWAR|<--
+-------+--------+-------+-------+-------+--------------------+-----------+
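A right outer join is a left outer join with the two inputs swapped, followed by putting the original left-side columns back in front. The plain-Python sketch below defines a small left_join model and derives right_join from it; both are hypothetical helpers over the df_1/df_2 sample rows, not Spark internals.

```python
df_1 = [(12, "Teradata", "RDBMS"), (14, "Snowflake", "CloudDB"),
        (15, "Vertica", "RDBMS"), (17, "Oracle", "RDBMS"),
        (19, "MongoDB", "NOSQL")]
df_2 = [(17, "Oracle", "RDBMS"), (19, "MongoDB", "NOSQL"),
        (21, "SingleStore", "RDBMS"), (22, "Mysql", "RDBMS"),
        (14, "Snowflake", "RDBMS")]


def left_join(left, right, key=0):
    """Keep every left row; pad with None where the right side has no match."""
    index = {}
    for rrow in right:
        index.setdefault(rrow[key], []).append(rrow)
    pad = (None,) * len(right[0])  # assumes right is non-empty
    result = []
    for lrow in left:
        matches = index.get(lrow[key])
        if matches:
            result.extend(lrow + rrow for rrow in matches)
        else:
            result.append(lrow + pad)
    return result


def right_join(left, right, key=0):
    """Right outer join expressed as a left outer join with the inputs swapped."""
    n_right = len(right[0])
    swapped = left_join(right, left, key)  # rows are right columns + left columns
    # Move the original left-side columns back in front.
    return [row[n_right:] + row[:n_right] for row in swapped]


for row in right_join(df_1, df_2):
    print(row)
```

Because the model walks df_2 in its original order, the rows come out as 17, 19, 21, 22, 14; Spark's own row order is not guaranteed and may differ.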

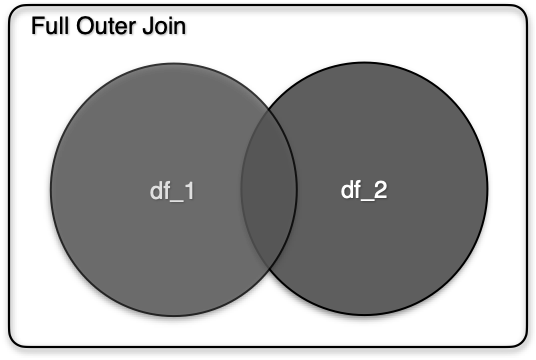

➠ Outer / full / fullouter / full_outer Join: A full outer join returns all records from both DataFrames: matching rows are combined, and unmatched rows from either side are padded with nulls. Outer, full, fullouter and full_outer are aliases of each other. The image below shows a pictorial representation of a full outer join; the entire gray portion is returned, i.e. all data from both the left and the right DataFrame.

- Example 1: In the examples below, an arrow (<--) at the end of a row marks a row that exists in only one of the two DataFrames (left or right).

df_1.join(df_2,df_1.db_id==df_2.db_id,"full").show()
+-----+---------+-------+-----+-----------+-------+
|db_id| db_name|db_type|db_id| db_name|db_type|
+-----+---------+-------+-----+-----------+-------+
| 15| Vertica| RDBMS| null| null| null|<--
| null| null| null| 22| Mysql| RDBMS|<--
| 17| Oracle| RDBMS| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL| 19| MongoDB| NOSQL|
| 12| Teradata| RDBMS| null| null| null|<--
| 14|Snowflake|CloudDB| 14| Snowflake| RDBMS|
| null| null| null| 21|SingleStore| RDBMS|<--
+-----+---------+-------+-----+-----------+-------+

- Example 2:

empdf.join(deptdf,empdf.dept_no==deptdf.dept_no,"full").show()
+-------+--------+-------+-------+-------+--------------------+-----------+
| emp_no|emp_name| salary|dept_no|dept_no| department_name| loc_name|
+-------+--------+-------+-------+-------+--------------------+-----------+
|1000258| BLAKE|2850.00| 300| 300| SALES| BENGALURU|
|1000299| ALLEN|1600.00| 300| 300| SALES| BENGALURU|
|1000310| WARD|1250.00| 300| 300| SALES| BENGALURU|
|1000312| MARTIN|1250.00| 300| 300| SALES| BENGALURU|
|1000315| TURNER|1500.00| 300| 300| SALES| BENGALURU|
|1000336| JAMES| 950.00| 300| 300| SALES| BENGALURU|
|1000347| DAVID|1400.00| 500| null| null| null|<--
|1000245| PRADEEP|5000.00| 100| 100| ACCOUNTS| JAIPUR|
|1000262| CLARK|2450.00| 100| 100| ACCOUNTS| JAIPUR|
|1000346| MILLER|1300.00| 100| 100| ACCOUNTS| JAIPUR|
| null| null| null| null| 400|INFORMATION TECHN...|BHUBANESWAR|<--
|1000276| JONES|2975.00| 200| 200| R & D| NEW DELHI|
|1000288| SCOTT|3000.00| 200| 200| R & D| NEW DELHI|
|1000292| FORD|3000.00| 200| 200| R & D| NEW DELHI|
|1000294| SMITH| 800.00| 200| 200| R & D| NEW DELHI|
|1000326| ADAMS|1100.00| 200| 200| R & D| NEW DELHI|
+-------+--------+-------+-------+-------+--------------------+-----------+
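One way to think about a full outer join is "left outer join, plus the right rows that nothing on the left matched". The plain-Python sketch below builds it exactly that way from a small left_join model; full_outer_join and left_join are hypothetical helpers over the df_1/df_2 sample rows, and both inputs are assumed to be non-empty.

```python
df_1 = [(12, "Teradata", "RDBMS"), (14, "Snowflake", "CloudDB"),
        (15, "Vertica", "RDBMS"), (17, "Oracle", "RDBMS"),
        (19, "MongoDB", "NOSQL")]
df_2 = [(17, "Oracle", "RDBMS"), (19, "MongoDB", "NOSQL"),
        (21, "SingleStore", "RDBMS"), (22, "Mysql", "RDBMS"),
        (14, "Snowflake", "RDBMS")]


def left_join(left, right, key=0):
    """Keep every left row; pad with None where the right side has no match."""
    index = {}
    for rrow in right:
        index.setdefault(rrow[key], []).append(rrow)
    pad = (None,) * len(right[0])
    result = []
    for lrow in left:
        matches = index.get(lrow[key])
        if matches:
            result.extend(lrow + rrow for rrow in matches)
        else:
            result.append(lrow + pad)
    return result


def full_outer_join(left, right, key=0):
    """All left rows (padded if unmatched) plus right rows nobody matched."""
    left_keys = {lrow[key] for lrow in left}
    result = left_join(left, right, key)
    pad = (None,) * len(left[0])  # one None per left-side column
    result += [pad + rrow for rrow in right if rrow[key] not in left_keys]
    return result


for row in full_outer_join(df_1, df_2):
    print(row)
```

The result has 7 rows, one for each distinct db_id across both inputs (12, 14, 15, 17, 19, 21, 22), as in the Spark output above.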

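➠ Cross Join: This join returns the Cartesian product of the rows of the two DataFrames, i.e. every row of the left DataFrame paired with every row of the right DataFrame. A cross join happens when no joining condition or column is specified; the crossJoin method produces the same result explicitly.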

- Example 1:

df_1.join(df_2).show()
+-----+---------+-------+-----+-----------+-------+
|db_id| db_name|db_type|db_id| db_name|db_type|
+-----+---------+-------+-----+-----------+-------+
| 12| Teradata| RDBMS| 17| Oracle| RDBMS|
| 12| Teradata| RDBMS| 19| MongoDB| NOSQL|
| 12| Teradata| RDBMS| 21|SingleStore| RDBMS|
| 12| Teradata| RDBMS| 22| Mysql| RDBMS|
| 14|Snowflake|CloudDB| 17| Oracle| RDBMS|
. . .
| 19| MongoDB| NOSQL| 22| Mysql| RDBMS|
+-----+---------+-------+-----+-----------+-------+

- Example 2:

df_1.crossJoin(df_2).show()
+-----+---------+-------+-----+-----------+-------+
|db_id| db_name|db_type|db_id| db_name|db_type|
+-----+---------+-------+-----+-----------+-------+
| 12| Teradata| RDBMS| 17| Oracle| RDBMS|
| 12| Teradata| RDBMS| 19| MongoDB| NOSQL|
| 12| Teradata| RDBMS| 21|SingleStore| RDBMS|
| 12| Teradata| RDBMS| 22| Mysql| RDBMS|
| 14|Snowflake|CloudDB| 17| Oracle| RDBMS|
. . .
| 19| MongoDB| NOSQL| 22| Mysql| RDBMS|
+-----+---------+-------+-----+-----------+-------+

- Example 3:

empdf.join(deptdf).orderBy("emp_no").show()
+-------+--------+-------+-------+-------+--------------------+-----------+
| emp_no|emp_name| salary|dept_no|dept_no| department_name| loc_name|
+-------+--------+-------+-------+-------+--------------------+-----------+
|1000245| PRADEEP|5000.00| 100| 300| SALES| BENGALURU|
|1000245| PRADEEP|5000.00| 100| 400|INFORMATION TECHN...|BHUBANESWAR|
|1000245| PRADEEP|5000.00| 100| 100| ACCOUNTS| JAIPUR|
|1000245| PRADEEP|5000.00| 100| 200| R & D| NEW DELHI|
|1000258| BLAKE|2850.00| 300| 100| ACCOUNTS| JAIPUR|
|1000258| BLAKE|2850.00| 300| 200| R & D| NEW DELHI|
|1000258| BLAKE|2850.00| 300| 300| SALES| BENGALURU|
|1000258| BLAKE|2850.00| 300| 400|INFORMATION TECHN...|BHUBANESWAR|
|1000262| CLARK|2450.00| 100| 400|INFORMATION TECHN...|BHUBANESWAR|
|1000262| CLARK|2450.00| 100| 200| R & D| NEW DELHI|
. . .
|1000288| SCOTT|3000.00| 200| 400|INFORMATION TECHN...|BHUBANESWAR|
+-------+--------+-------+-------+-------+--------------------+-----------+
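The size of a cross join is simply the product of the two row counts. The plain-Python sketch below uses itertools.product on the df_1/df_2 sample rows to show this: 5 × 5 = 25 rows. By the same arithmetic, the employee example (15 employees and 4 departments, judging by the outputs above) produces 60 rows, and since show() prints 20 rows by default, Example 3 stops after the fifth employee, SCOTT.

```python
from itertools import product

df_1 = [(12, "Teradata", "RDBMS"), (14, "Snowflake", "CloudDB"),
        (15, "Vertica", "RDBMS"), (17, "Oracle", "RDBMS"),
        (19, "MongoDB", "NOSQL")]
df_2 = [(17, "Oracle", "RDBMS"), (19, "MongoDB", "NOSQL"),
        (21, "SingleStore", "RDBMS"), (22, "Mysql", "RDBMS"),
        (14, "Snowflake", "RDBMS")]

# Cartesian product: every row of df_1 paired with every row of df_2.
crossed = [lrow + rrow for lrow, rrow in product(df_1, df_2)]

print(len(crossed))  # 25, i.e. 5 rows x 5 rows
print(crossed[0])    # (12, 'Teradata', 'RDBMS', 17, 'Oracle', 'RDBMS')
```

Because the output grows multiplicatively, a cross join between two large DataFrames can be very expensive, which is one reason to always pass a join condition unless a Cartesian product is really intended.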

➠ Semi / leftsemi / left_semi Join: This join is similar to an inner join, but it returns only the columns of the left DataFrame, and each left row appears at most once even if it matches several rows on the right. Semi, leftsemi and left_semi are aliases of each other.

- Example 1:

df_1.join(df_2,df_1.db_id==df_2.db_id,"semi").show()
+-----+---------+-------+
|db_id| db_name|db_type|
+-----+---------+-------+
| 14|Snowflake|CloudDB|
| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL|
+-----+---------+-------+

- Example 2:

empdf.join(deptdf,empdf.dept_no==deptdf.dept_no,"semi").show()
+-------+--------+-------+-------+
| emp_no|emp_name| salary|dept_no|
+-------+--------+-------+-------+
|1000245| PRADEEP|5000.00| 100|
|1000258| BLAKE|2850.00| 300|
|1000262| CLARK|2450.00| 100|
|1000276| JONES|2975.00| 200|
|1000288| SCOTT|3000.00| 200|
|1000292| FORD|3000.00| 200|
|1000294| SMITH| 800.00| 200|
|1000299| ALLEN|1600.00| 300|
|1000310| WARD|1250.00| 300|
|1000312| MARTIN|1250.00| 300|
|1000315| TURNER|1500.00| 300|
|1000326| ADAMS|1100.00| 200|
|1000336| JAMES| 950.00| 300|
|1000346| MILLER|1300.00| 100|
+-------+--------+-------+-------+
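The difference between a semi join and an inner join shows up when a left row matches more than one right row. The plain-Python sketch below adds one hypothetical extra row for db_id 17 to the df_2 sample (it is not in the tutorial's data) and compares the two: the inner join repeats db_id 17, while the semi join keeps it once and returns no right-side columns. semi_join is a hypothetical helper, not Spark's implementation.

```python
df_1 = [(12, "Teradata", "RDBMS"), (14, "Snowflake", "CloudDB"),
        (15, "Vertica", "RDBMS"), (17, "Oracle", "RDBMS"),
        (19, "MongoDB", "NOSQL")]
# df_2 from the tutorial plus one hypothetical extra row for db_id 17.
df_2 = [(17, "Oracle", "RDBMS"), (19, "MongoDB", "NOSQL"),
        (21, "SingleStore", "RDBMS"), (22, "Mysql", "RDBMS"),
        (14, "Snowflake", "RDBMS"), (17, "Oracle", "CloudDB")]


def semi_join(left, right, key=0):
    """Left rows with at least one match; right columns are not returned."""
    right_keys = {rrow[key] for rrow in right}
    return [lrow for lrow in left if lrow[key] in right_keys]


# An inner join repeats db_id 17 because it matches two right rows...
inner_ids = [lrow[0] for lrow in df_1 for rrow in df_2 if lrow[0] == rrow[0]]
print(inner_ids)  # [14, 17, 17, 19]

# ...while the semi join keeps each matching left row exactly once.
print([row[0] for row in semi_join(df_1, df_2)])  # [14, 17, 19]
```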

➠ Anti / leftanti / left_anti Join: This join works like a "minus" on the join keys and, like the semi join, returns no data from the right DataFrame. It returns the rows of the left DataFrame whose join-column value has no match in the right DataFrame. Anti, leftanti and left_anti are aliases of each other. The image below shows a pictorial representation of an anti join in Spark; only the gray portion is returned, i.e. records from the left DataFrame that have no match in the right DataFrame.

Example: In the example below, db_id 12 and 15 have no match in df_2, so these rows from the left DataFrame are returned.

df_1.join(df_2,df_1.db_id==df_2.db_id,"anti").show()
+-----+---------+-------+
|db_id| db_name|db_type|
+-----+---------+-------+
| 12| Teradata| RDBMS|
| 15| Vertica| RDBMS|
+-----+---------+-------+
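The anti join is the complement of the semi join: together they split the left DataFrame into rows that have a match and rows that do not. The plain-Python sketch below models both over the df_1/df_2 sample rows; semi_join and anti_join are hypothetical helpers, not Spark's implementation.

```python
df_1 = [(12, "Teradata", "RDBMS"), (14, "Snowflake", "CloudDB"),
        (15, "Vertica", "RDBMS"), (17, "Oracle", "RDBMS"),
        (19, "MongoDB", "NOSQL")]
df_2 = [(17, "Oracle", "RDBMS"), (19, "MongoDB", "NOSQL"),
        (21, "SingleStore", "RDBMS"), (22, "Mysql", "RDBMS"),
        (14, "Snowflake", "RDBMS")]


def semi_join(left, right, key=0):
    """Left rows whose key appears on the right side."""
    right_keys = {rrow[key] for rrow in right}
    return [lrow for lrow in left if lrow[key] in right_keys]


def anti_join(left, right, key=0):
    """Left rows whose key does not appear on the right side."""
    right_keys = {rrow[key] for rrow in right}
    return [lrow for lrow in left if lrow[key] not in right_keys]


print(anti_join(df_1, df_2))
# [(12, 'Teradata', 'RDBMS'), (15, 'Vertica', 'RDBMS')]
```

One caveat: this model treats None like any other key value, whereas in Spark a null join key never satisfies an equality condition, so null-keyed left rows never count as matched.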

➠ Special Conditions and Challenges in Joins

- Join on same column name: When the joining column has the same name in both DataFrames and is passed as a string, the output contains that joining column only once.

df_1.join(df_2,"db_id","left").show()
+-----+---------+-------+---------+-------+
|db_id| db_name|db_type| db_name|db_type|
+-----+---------+-------+---------+-------+
| 12| Teradata| RDBMS| null| null|
| 14|Snowflake|CloudDB|Snowflake| RDBMS|
| 15| Vertica| RDBMS| null| null|
| 17| Oracle| RDBMS| Oracle| RDBMS|
| 19| MongoDB| NOSQL| MongoDB| NOSQL|
+-----+---------+-------+---------+-------+
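The header of the output above follows a simple rule: the joining column appears once, followed by the remaining left columns and then the remaining right columns. The plain-Python sketch below models that rule; using_join_columns is a hypothetical helper. It also accepts a list of names, mirroring the fact that PySpark's join accepts a list of column names for on (for example df_1.join(df_2, ["db_id", "db_name"], "left")), in which case each listed column appears only once.

```python
def using_join_columns(left_cols, right_cols, on):
    """Output column names for a join on same-named key column(s)."""
    keys = [on] if isinstance(on, str) else list(on)
    # Key columns appear once, then the other left and right columns in order.
    return (keys
            + [c for c in left_cols if c not in keys]
            + [c for c in right_cols if c not in keys])


cols = ["db_id", "db_name", "db_type"]
print(using_join_columns(cols, cols, "db_id"))
# ['db_id', 'db_name', 'db_type', 'db_name', 'db_type']
print(using_join_columns(cols, cols, ["db_id", "db_name"]))
# ['db_id', 'db_name', 'db_type', 'db_type']
```

Note that only the key columns are merged: db_name and db_type still appear twice after a join on db_id, which leads to the ambiguity problem discussed later on this page.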

- Join on multiple columns: Multiple columns can be used to join two DataFrames. When a join needs several conditions, pass them as a list of conditions, as shown in the example below; PySpark combines the conditions in the list with AND, which is equivalent to joining them with the & operator.

df_1.join(df_2,[df_1.db_id==df_2.db_id, df_1.db_name==df_2.db_name],"inner").show()
+-----+---------+-------+-----+---------+-------+
|db_id| db_name|db_type|db_id| db_name|db_type|
+-----+---------+-------+-----+---------+-------+
| 14|Snowflake|CloudDB| 14|Snowflake| RDBMS|
| 17| Oracle| RDBMS| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL| 19| MongoDB| NOSQL|
+-----+---------+-------+-----+---------+-------+

- Join with not equal to condition: Besides equality, a join condition can use not-equal-to (!=) to exclude certain matches. As with multiple equality conditions, pass all the conditions together as a list, as shown in the example below, which keeps rows whose db_id matches but whose db_type differs.

df_1.join(df_2,[df_1.db_id==df_2.db_id, df_1.db_type!=df_2.db_type],"inner").show()
+-----+---------+-------+-----+---------+-------+
|db_id| db_name|db_type|db_id| db_name|db_type|
+-----+---------+-------+-----+---------+-------+
| 14|Snowflake|CloudDB| 14|Snowflake| RDBMS|
+-----+---------+-------+-----+---------+-------+
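A list of join conditions behaves like a single condition in which every item must hold. The plain-Python sketch below models this with all() over a list of predicates on the df_1/df_2 sample rows; join_on_all and the lambda predicates are hypothetical stand-ins for PySpark Column expressions such as df_1.db_id==df_2.db_id.

```python
df_1 = [(12, "Teradata", "RDBMS"), (14, "Snowflake", "CloudDB"),
        (15, "Vertica", "RDBMS"), (17, "Oracle", "RDBMS"),
        (19, "MongoDB", "NOSQL")]
df_2 = [(17, "Oracle", "RDBMS"), (19, "MongoDB", "NOSQL"),
        (21, "SingleStore", "RDBMS"), (22, "Mysql", "RDBMS"),
        (14, "Snowflake", "RDBMS")]


def join_on_all(left, right, conditions):
    """Inner join where a pair matches only if every condition holds (AND)."""
    return [lrow + rrow
            for lrow in left
            for rrow in right
            if all(cond(lrow, rrow) for cond in conditions)]


conditions = [
    lambda lrow, rrow: lrow[0] == rrow[0],  # models df_1.db_id == df_2.db_id
    lambda lrow, rrow: lrow[2] != rrow[2],  # models df_1.db_type != df_2.db_type
]

print(join_on_all(df_1, df_2, conditions))
# [(14, 'Snowflake', 'CloudDB', 14, 'Snowflake', 'RDBMS')]
```

Dropping the second predicate brings back all three db_id matches (14, 17 and 19), which shows how each extra condition can only narrow the result.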

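- Join on column having different name: Sometimes the same column is defined with different names in different DataFrames. In that case, pass a condition that equates the two columns, referencing each one through its DataFrame. In the example below, the columns of df_2 are first renamed with a df_ prefix to create this situation.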

# Renaming all columns to append df_ as prefix
import pyspark.sql.functions as f
df_2_1 = df_2.select([f.col(column).alias("df_"+column) for column in df_2.columns])
df_2_1.show()
+--------+-----------+----------+
|df_db_id| df_db_name|df_db_type|
+--------+-----------+----------+
| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL|
| 21|SingleStore| RDBMS|
| 22| Mysql| RDBMS|
| 14| Snowflake| RDBMS|
+--------+-----------+----------+
df_1.join(df_2_1,df_1.db_id==df_2_1.df_db_id,"inner").show()
+-----+---------+-------+--------+----------+----------+
|db_id| db_name|db_type|df_db_id|df_db_name|df_db_type|
+-----+---------+-------+--------+----------+----------+
| 14|Snowflake|CloudDB| 14| Snowflake| RDBMS|
| 17| Oracle| RDBMS| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL| 19| MongoDB| NOSQL|
+-----+---------+-------+--------+----------+----------+

- Access same named columns after join: When a joined DataFrame contains several columns with the same name, those columns cannot be selected by name alone. Such cases need to be handled differently; two approaches to resolve this are explained here.

- If you try to select such a column directly by name, Spark fails with the error "Reference 'column_name' is ambiguous", as shown in the example below.

joined_df = df_1.join(df_2,[df_1.db_id==df_2.db_id, df_1.db_type!=df_2.db_type],"inner")
joined_df.printSchema()
root
 |-- db_id: string (nullable = true)
 |-- db_name: string (nullable = true)
 |-- db_type: string (nullable = true)
 |-- db_id: string (nullable = true)
 |-- db_name: string (nullable = true)
 |-- db_type: string (nullable = true)
joined_df.select("db_type").show()
Output:
pyspark.sql.utils.AnalysisException: Reference 'db_type' is ambiguous, could be: db_type, db_type.;

- One way to resolve this issue is to reference each column through the original (pre-join) DataFrame it came from. In the example below, the pre-join DataFrames df_1 and df_2 are used to fetch the same-named column db_type from each side.

joined_df = df_1.join(df_2,[df_1.db_id==df_2.db_id, df_1.db_type!=df_2.db_type],"inner")
joined_df.select(df_2.db_type, df_1.db_type).show()
Output:
+-------+-------+
|db_type|db_type|
+-------+-------+
| RDBMS|CloudDB|
+-------+-------+

- The other way is to rename the columns of the second DataFrame before the join, so that there is no naming conflict afterwards and any column can be referenced by name without going through the pre-join DataFrames.

A Python list comprehension is used to rename all the columns of the DataFrame; you can visit this page to learn more about list comprehensions.

# Renaming all columns to append df_ as prefix
import pyspark.sql.functions as f
df_2_renamed = df_2.select([f.col(column).alias("df_"+column) for column in df_2.columns])
df_2_renamed.show()
+--------+-----------+----------+
|df_db_id| df_db_name|df_db_type|<--
+--------+-----------+----------+
| 17| Oracle| RDBMS|
| 19| MongoDB| NOSQL|
| 21|SingleStore| RDBMS|
| 22| Mysql| RDBMS|
| 14| Snowflake| RDBMS|
+--------+-----------+----------+
joined_df = df_1.join(df_2_renamed,[df_1.db_id == df_2_renamed.df_db_id, df_1.db_type != df_2_renamed.df_db_type],"inner")
joined_df.select("db_type","df_db_type").show()
+-------+----------+
|db_type|df_db_type|
+-------+----------+
|CloudDB| RDBMS|
+-------+----------+
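A variation on the renaming approach is to prefix only the right-side columns whose names actually clash with the left side, leaving the rest untouched. The plain-Python sketch below computes such a name list; dedupe_names is a hypothetical helper, and the "owner" column in the second example is invented purely to show a non-clashing name.

```python
def dedupe_names(left_columns, right_columns, prefix="df_"):
    """Prefix only the right-side names that clash with a left-side name."""
    taken = set(left_columns)
    return [prefix + c if c in taken else c for c in right_columns]


print(dedupe_names(["db_id", "db_name", "db_type"],
                   ["db_id", "db_name", "db_type"]))
# ['df_db_id', 'df_db_name', 'df_db_type']
print(dedupe_names(["db_id", "db_name", "db_type"],
                   ["db_id", "db_name", "db_type", "owner"]))
# ['df_db_id', 'df_db_name', 'df_db_type', 'owner']
```

In PySpark the resulting names could be applied in one call with DataFrame.toDF, e.g. df_2_renamed = df_2.toDF(*dedupe_names(df_1.columns, df_2.columns)); toDF expects exactly one new name per existing column, which this helper preserves.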
